Building Ethical Consciousness and Navigating Truth in the Age of AI

We want a system where claims are tested, not just policed, and where truth emerges from dialogue, not decree.

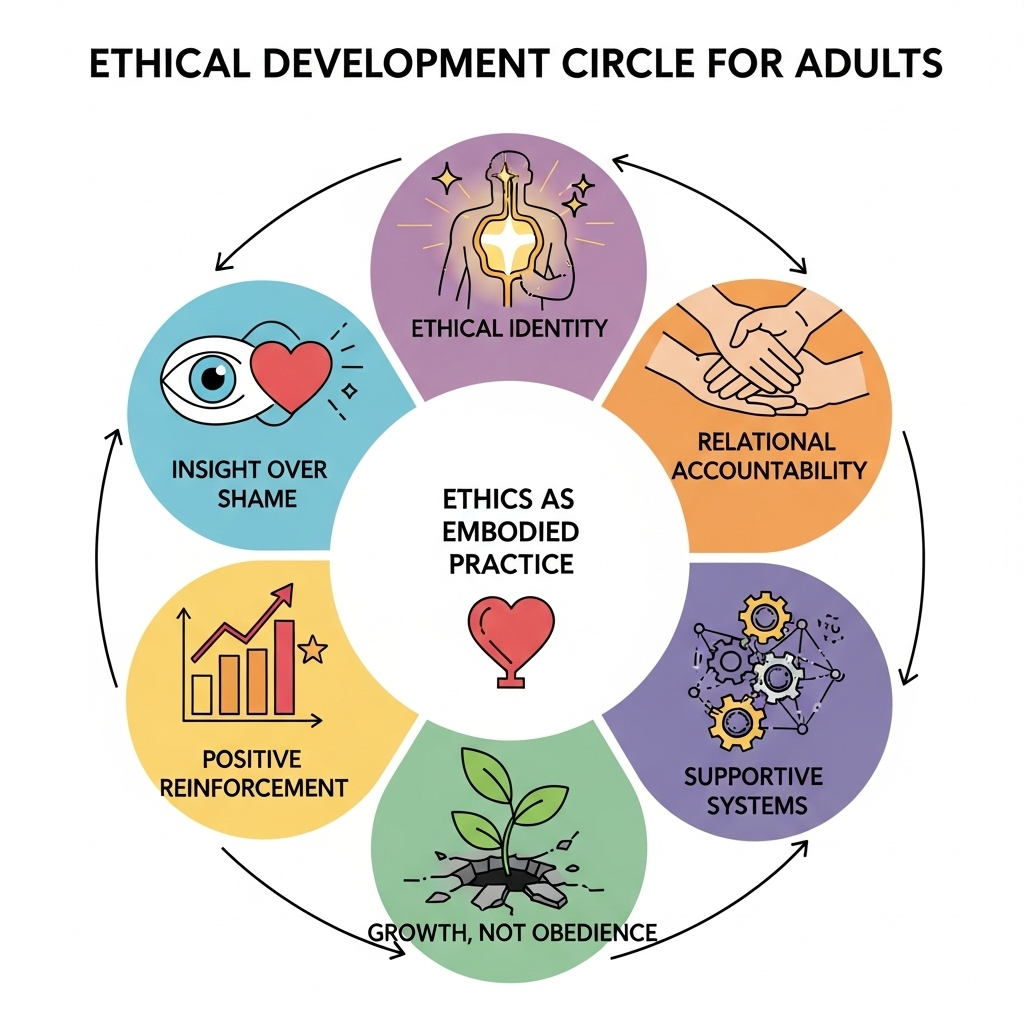

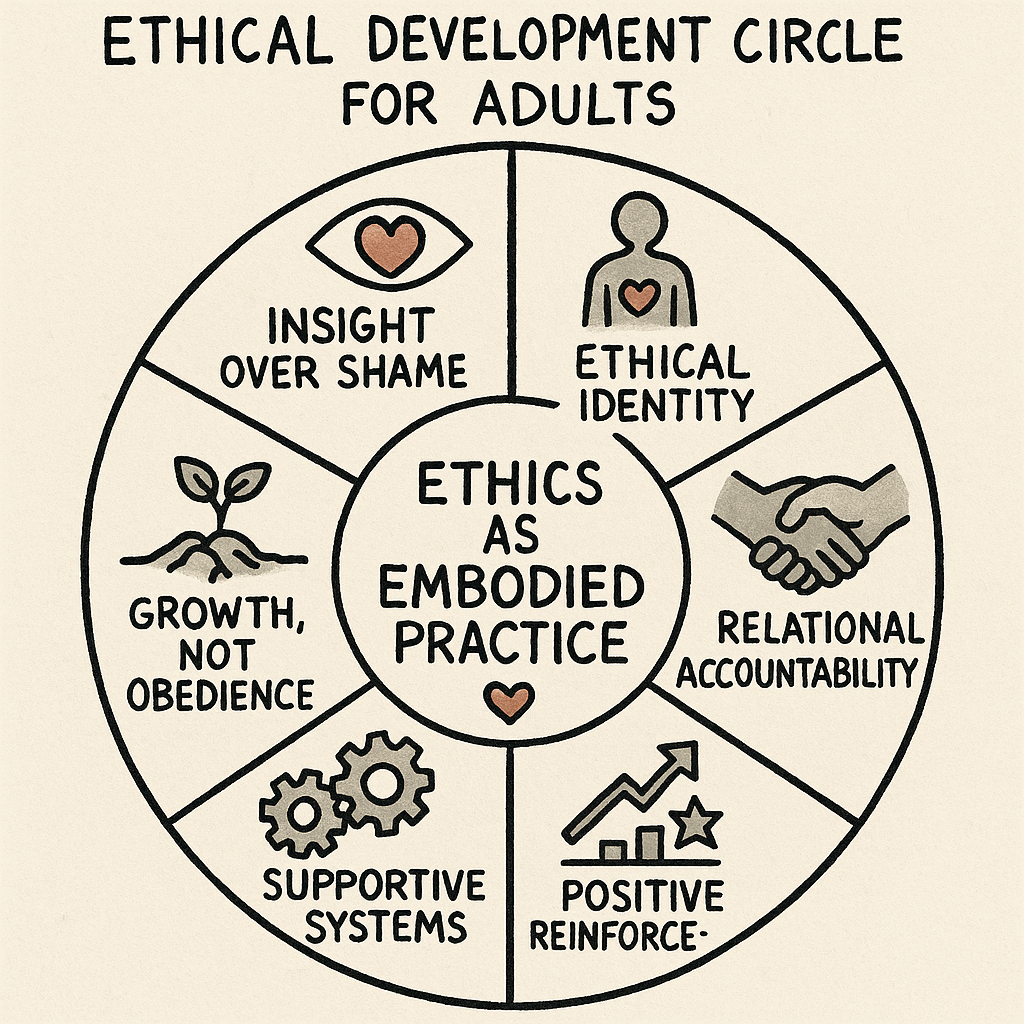

Part I: Cultivating Ethical Behavior Without Pain or Punishment

Core Question: How do we instill ethics in adult humans without inflicting pain or relying on punishment?

Root Causes of Unethical Behavior

- Fear (scarcity, rejection, failure)

- Ignorance (lack of empathy or knowledge)

- Trauma (unprocessed emotional pain)

- Cultural Conditioning (systems that normalize harm)

Strategies for Ethical Development

- Insight Over Shame
  - Foster understanding through storytelling, reflective dialogue, and empathetic questioning.
  - Replace judgment with curiosity: "What need were you trying to meet?"

- Relational Ethics
  - Ethics grow from human connection, not from fear of punishment.
  - Prioritize community responsibility over individual guilt.

- Ethical Identity Formation
  - Reinforce a person’s self-image as someone who acts ethically.
  - Praise moral effort, not just moral results.

- Positive Reinforcement Loops
  - Acknowledge and reward ethical actions.
  - Over time, ethics become self-reinforcing behaviors tied to dignity and self-expression.

- Supportive Systems
  - Restructure environments to remove rewards for unethical behavior.
  - Provide economic and social conditions in which ethical choices are viable.

- Growth, Not Obedience
  - Treat ethics as a daily, imperfect practice, like meditation or art.
  - Frame ethics as integration and alignment, not compliance.

Part II: Truth, Misinformation, and AI

Core Question: Who fact-checks the fact-checkers in the age of large language models (LLMs)? How do we maintain a shared factual reality, especially for legal and civic decisions?

The Collapse of Shared Reality

- LLMs can generate convincing but false narratives.

- Polarized societies and broken trust in institutions make such narratives harder to correct.

Building a New Foundation for Truth

- Define Consensual Factual Reality
  - Ground it in evidence, transparent methods, and shared definitions.
  - Maintain it through active civic and institutional participation.

- AI as a Tool, Not Authority
  - LLMs should help triangulate truth across databases, not define it.
  - Emphasize provenance, evidence trails, and multiple perspectives.

- Accountable Fact-Checking
  - Require transparency, auditable methods, and third-party review.
  - Support distributed credibility scoring and independent watchdog tools.

- Legislating Truth Without Censorship
  - Enforce process integrity (e.g., truth-in-advertising rules, algorithmic transparency).
  - Avoid ideological capture and vague misinformation laws.
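The "AI as a Tool, Not Authority" principle can be made concrete with a small sketch of triangulation that keeps provenance attached to every verdict. It is a minimal illustration, not a real system: the `Citation` record and `triangulate` function are hypothetical, "independence" is crudely approximated by counting distinct publishers, and the threshold of two is arbitrary.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Citation:
    """One piece of evidence with its provenance (hypothetical schema)."""
    publisher: str   # who stands behind the source; a crude proxy for independence
    url: str         # where a reader can check the evidence directly
    supports: bool   # True if the source supports the claim, False if it refutes it


def triangulate(citations: list[Citation], min_independent: int = 2) -> tuple[str, list[Citation]]:
    """Return a verdict together with the full evidence trail, never a bare answer."""
    # Count distinct publishers per side, so ten articles from one outlet count once.
    supporting = {c.publisher for c in citations if c.supports}
    refuting = {c.publisher for c in citations if not c.supports}

    if len(supporting) >= min_independent and len(refuting) >= min_independent:
        verdict = "contested"  # credible sources disagree: surface both sides
    elif len(supporting) >= min_independent:
        verdict = "corroborated"
    elif len(refuting) >= min_independent:
        verdict = "refuted"
    else:
        verdict = "insufficient evidence"
    # The trail is returned unchanged so a human can audit how the verdict was reached.
    return verdict, list(citations)
```

Returning the trail alongside the verdict is the design point: the assistant's output is something to check, not something to obey.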

Explanation of Key Concepts

Ideological Capture: Occurs when a truth-defining system is overtaken by a dominant political or cultural agenda, distorting its neutrality. This undermines trust and silences dissent.

Vague Misinformation Laws: Statutes that define misinformation ambiguously or not at all, often leading to overreach, censorship, and the suppression of legitimate inquiry or disagreement.

Proposed Framework for Truth Ecosystem

| Layer | Description |
|---|---|
| Base | Open knowledge graphs with dispute resolution mechanisms |
| Middle | AI assistants with multi-source citation and disclosure |
| Top | Civic oversight for platforms and fact-checking bodies |
| Civic Layer | Education in critical thinking and epistemic humility |
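The Base layer's "dispute resolution mechanisms" could look roughly like the following minimal sketch. Everything here is an illustrative assumption rather than an existing knowledge-graph standard: the `Claim` and `Dispute` classes, the status labels, and the rule that a single upheld dispute retracts a claim. Facts are stored as subject, predicate, object triples, and disputes are appended rather than deleted so the history stays auditable.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Dispute:
    reason: str                    # why the challenger believes the claim is wrong
    evidence_url: str              # a dispute must bring evidence, not just an objection
    outcome: Optional[str] = None  # None while open, then "upheld" or "rejected"


@dataclass
class Claim:
    # A knowledge-graph fact stored as a (subject, predicate, object) triple.
    subject: str
    predicate: str
    obj: str
    disputes: list[Dispute] = field(default_factory=list)

    def open_dispute(self, reason: str, evidence_url: str) -> Dispute:
        """Attach a new, unresolved dispute to this claim."""
        dispute = Dispute(reason, evidence_url)
        self.disputes.append(dispute)
        return dispute

    @property
    def status(self) -> str:
        # Hypothetical rule: one upheld dispute retracts the claim; any open one flags it.
        if any(d.outcome == "upheld" for d in self.disputes):
            return "retracted"
        if any(d.outcome is None for d in self.disputes):
            return "under dispute"
        return "accepted"


def resolve(dispute: Dispute, upheld: bool) -> None:
    """Record the result of a review (in practice, after a documented process)."""
    dispute.outcome = "upheld" if upheld else "rejected"
```

Because disputes are never removed, a claim that survives challenges carries a visible record of having been tested, which is the sense in which claims are "tested, not just policed."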

Appendix I: Model Legislative Language

Truth and Information Integrity Act

Section 3.1 — Definition of Misinformation

"Publicly disseminated content that is demonstrably false, verifiably refuted by a preponderance of independently sourced, peer-reviewed, or official evidence, and likely to cause material harm to public safety, health, or civil order."

Clarifications:

- Demonstrably false = factually incorrect based on credible sources.

- Material harm = violence, medical injury, or significant economic fraud.

- Opinions, satire, or good-faith hypotheses are exempt.
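The Section 3.1 definition is conjunctive: content qualifies only if it is a factual claim (not opinion, satire, or hypothesis), it is refuted by the weight of evidence, and it is likely to cause one of the listed material harms. A minimal Python sketch of that test follows. The names are hypothetical, and approximating "preponderance" by comparing counts of independent sources is an assumption made for illustration; a real tribunal would weigh evidence, not count it.

```python
from dataclasses import dataclass
from enum import Enum


class ContentKind(Enum):
    FACTUAL_CLAIM = "factual claim"
    OPINION = "opinion"
    SATIRE = "satire"
    HYPOTHESIS = "good-faith hypothesis"


# The categories of "material harm" named in the Clarifications.
MATERIAL_HARMS = frozenset({"violence", "medical injury", "significant economic fraud"})


@dataclass(frozen=True)
class Assessment:
    kind: ContentKind
    refuting_sources: int          # independent, peer-reviewed, or official sources refuting the claim
    supporting_sources: int        # comparable sources supporting it
    likely_harms: frozenset[str]   # harms a reviewer judged likely (hypothetical field)


def meets_misinformation_definition(a: Assessment) -> bool:
    """Every condition must hold; failing any single one means the content does not qualify."""
    # Exemptions first: opinion, satire, and good-faith hypotheses never qualify.
    if a.kind is not ContentKind.FACTUAL_CLAIM:
        return False
    # "Demonstrably false" and "verifiably refuted" are collapsed into one check here.
    # Assumption: preponderance = strictly more refuting than supporting sources.
    refuted = a.refuting_sources > a.supporting_sources
    # In this sketch, only the three listed harms count.
    harmful = bool(a.likely_harms & MATERIAL_HARMS)
    return refuted and harmful
```

Checking exemptions before anything else mirrors the intent of the Clarifications: protected forms of speech are never weighed against evidence at all.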

Section 3.2 — Standards of Enforcement

- Evidence Burden
  - Authorities must cite the specific claim, the evidence of its falsehood, and its potential for harm.

- Intent Safeguard
  - Accidental or good-faith sharing is not penalized.
  - Only deliberate, malicious spread is subject to enforcement.

- Transparency Requirement
  - All enforcement actions must be logged and open to public review.

- Appeals and Oversight
  - A nonpartisan oversight board must review appeals.
  - Individuals may appeal content decisions within 30 days.

- Exemption for Journalism and Art
  - Journalistic and artistic content is exempt unless shown to meet the criteria for deliberate, malicious spread.
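The procedural standards in Section 3.2 can likewise be sketched as a decision function. This is a hypothetical illustration: the `EnforcementRequest` fields and outcome strings are invented, a plain list stands in for a public, append-only log, and counting the 30-day appeal window from the decision date (inclusive) is one possible reading of "within 30 days." Under this reading, the journalism and art exemption is already covered by the intent safeguard, since both require deliberate, malicious spread.

```python
from dataclasses import dataclass
from datetime import date, timedelta

# Assumed reading of "within 30 days": counted from the decision date, inclusive.
APPEAL_WINDOW = timedelta(days=30)


@dataclass(frozen=True)
class EnforcementRequest:
    claim: str                      # the specific claim being acted on
    evidence_of_falsehood: str      # what shows the claim is false
    potential_harm: str             # which material harm is expected
    deliberate_and_malicious: bool  # intent established, not merely suspected
    decided_on: date


def decide(req: EnforcementRequest, public_log: list[dict]) -> str:
    """Apply the evidence burden and intent safeguard, logging every outcome."""
    if not (req.claim and req.evidence_of_falsehood and req.potential_harm):
        outcome = "rejected: evidence burden not met"
    elif not req.deliberate_and_malicious:
        # Accidental or good-faith sharing, including journalism or art
        # without malicious intent, is not penalized.
        outcome = "rejected: no deliberate, malicious spread shown"
    else:
        outcome = "action permitted"
    # Rejections are logged too, so the public can audit what was declined as well as what was done.
    public_log.append({"date": req.decided_on.isoformat(), "claim": req.claim, "outcome": outcome})
    return outcome


def can_appeal(decided_on: date, today: date) -> bool:
    """True while the appeal window for a decision is still open."""
    return today - decided_on <= APPEAL_WINDOW


if __name__ == "__main__":
    log: list[dict] = []
    request = EnforcementRequest(
        "Product X cures disease Y", "trial data", "medical injury", False, date(2025, 5, 1)
    )
    print(decide(request, log))                              # rejected: no deliberate, malicious spread shown
    print(can_appeal(date(2025, 5, 1), date(2025, 5, 31)))   # True
```

Logging every decision, not only the penalties, is what makes the Transparency Requirement checkable from outside.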

Optional Clause — Epistemic Pluralism

"In no case shall a claim be penalized solely because it contradicts a prevailing narrative, majority opinion, or state policy."

Final Reflection

"Truth is not the enemy of freedom — it is the condition for its possibility."

We must build ecosystems where truth is nurtured, not enforced. Ethics and truth are not fixed endpoints but shared processes of living in integrity with one another.

Appendix II

🔍 What is "Ideological Capture"?

Ideological capture happens when a particular belief system or political agenda dominates a system—in this case, a truth-checking or AI-regulating system.

Why it’s a problem:

- It biases enforcement: Truth becomes what the dominant ideology says it is.

- It delegitimizes opposition: Dissenting or minority views are dismissed as “misinformation.”

- It erodes trust: People across the spectrum lose faith in institutions they perceive as partisan or dogmatic.

Example:

Imagine a government or platform labeling criticism of capitalism or climate policy as “harmful misinformation,” not on the basis of evidence but because it challenges economic interests or dominant narratives.

🕳 What are “Vague Misinformation Laws”?

These are laws that don’t clearly define what counts as “misinformation”—leaving enforcement subjective or politicized.

Why that’s dangerous:

- They can be used to silence whistleblowers, journalists, or activists.

- They chill free speech, because people are afraid of violating unclear rules.

- They can criminalize truth-seeking, especially in contested or emerging areas (e.g. medical research, history, or foreign policy).

Example:

A law that says “spreading misinformation is punishable by fines” without defining:

- What constitutes misinformation?

- Who decides?

- What evidence is required?

- How is intent or good-faith inquiry treated?

🧭 Why Avoid Both?

Because the goal isn’t to create a Ministry of Truth, but to protect the public sphere by:

- Ensuring process transparency

- Encouraging public literacy

- Supporting dispute resolution and evidence-based correction, not punishment

We want a system where claims are tested, not just policed, and where truth emerges from dialogue, not decree.

✅ Instead, We Can:

| Principle | Practical Action |
|---|---|
| Clarity over ambiguity | Define misinformation with measurable criteria (e.g., verifiably false and materially harmful) |
| Due process | Create appeal mechanisms for flagged content |
| Pluralism | Involve diverse stakeholders in oversight |
| Transparency | Publish enforcement guidelines and audits |

Compiled and Summarized by ChatGPT – May 2025

Post Graphic Suggested by ChatGPT and Implemented by Gemini